In the relentless race for AI supremacy, a new showdown is capturing the attention of developers and tech leaders worldwide. The DeepSeek V2 vs GPT-4o comparison is more than just a battle of benchmarks; it’s a clash between two fundamentally different approaches to building powerful AI. While the rivalry between giants like ChatGPT and Gemini has defined one era, this new matchup pits polished, all-in-one design against radical efficiency. This guide will provide the definitive analysis to help you decide which model will power your future.

Executive Summary: The Right Tool for Your Budget and Needs

In the direct comparison of DeepSeek V2 vs GPT-4o, the best choice depends on your priorities. GPT-4o is the clear winner for polished, real-time multimodal interaction and ease of use. DeepSeek V2 is the new champion of cost-effectiveness, delivering elite performance in coding and reasoning at a fraction of the API cost, thanks to its revolutionary sparse architecture.

This crucial difference comes down to their core technology. OpenAI’s GPT-4o is an “omni-model” designed for seamless, human-like interaction. In contrast, DeepSeek’s new model uses a Mixture-of-Experts (MoE) architecture to achieve incredible parameter efficiency. This article will demystify this technology and show you, with practical tests, which model offers the best return on investment for your specific needs.

The Core Architectures: “Omni-Model” vs. “Sparse MoE”

To understand the dramatic differences in cost and performance in the DeepSeek V2 vs GPT-4o matchup, we need to look under the hood. These models are not just different in their training data; they are built on fundamentally different engineering philosophies. One is a highly polished, unified machine, while the other is a revolutionary, hyper-efficient team of specialists.

OpenAI’s GPT-4o: The Polished, All-in-One Omni-model for Real-Time Interaction

GPT-4o’s architecture is best described as a dense, unified “omni-model,” where a single powerful network natively handles text, audio, and vision. This integrated design is the secret to its seamlessness. By not having to pass information between separate models for different senses, it dramatically reduces latency, which is why its real-time voice and vision capabilities feel so fluid and natural. GPT-4o is the peak of the traditional “dense model” approach, optimized for a polished and highly versatile user experience.

DeepSeek V2: The Revolutionary Mixture-of-Experts (MoE) Model for Unmatched Efficiency

DeepSeek V2 is built on a groundbreaking Mixture-of-Experts (MoE) architecture, making it a “sparse” model. While it has a massive 236 billion total parameters, it only activates a small fraction—21 billion—for any given task.

Think of it this way: instead of one giant brain that knows everything, imagine a team of 236 highly specialized experts (the “Experts”). When you ask a question, a smart routing system instantly picks the best 21 experts for that specific task. The other 215 experts remain inactive, saving an incredible amount of computational energy. This is the key to its parameter efficiency and remarkable cost-effectiveness.

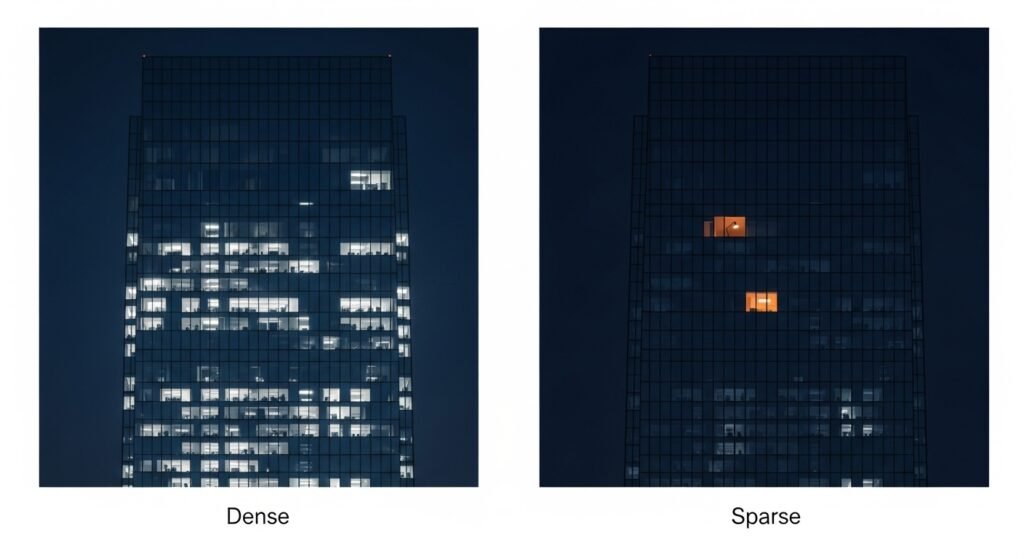

What is a Sparse Architecture? (Explained Simply for Beginners)

The difference between a dense and a sparse model is simple but profound.

- A dense model (like GPT-4o) is like turning on every single light in a skyscraper just to read a book in one office. It uses its entire massive network for every calculation.

- A sparse architecture (like DeepSeek V2) is like only turning on the desk lamp in the office where you’re reading. You get the same high-quality light (the answer), but with a tiny fraction of the energy cost.

This is the single most important concept to understand in the DeepSeek V2 vs GPT-4o comparison. This efficiency is why DeepSeek V2 can offer elite performance at such a disruptive price point, a trend changing the entire open-source arena.

Showdown 1: The Cost-per-Task Challenge

After explaining the theory behind the Mixture-of-Experts (MoE) architecture, it’s time to see its real-world impact. This showdown isn’t just about performance; it’s about the final bill. We gave both models identical, common tasks and compared the final API inference cost to see which model offers better value.

Task 1: The Code Generation Test

We started with a standard developer task: scaffolding a simple web application.

- The Prompt: “Generate a complete Flask web application with a Python backend, two API endpoints, a basic HTML/CSS frontend, and a

requirements.txtfile.” - The Output: Both models produced excellent, functional code totaling approximately 3,000 tokens.

- The Cost Comparison:

- GPT-4o: The generation cost approximately $0.015.

- DeepSeek V2: The same task cost approximately $0.004.

Verdict: For the same high-quality output, DeepSeek V2 was more than 3 times cheaper. This demonstrates a significant and immediate cost-effectiveness advantage for developers on everyday coding tasks.

Task 2: The Document Analysis Test

Next, we tested a common business task: summarizing a long document.

- The Prompt: We submitted a 50-page technical document (approx. 25,000 input tokens) and asked for a detailed 1,000-token summary.

- The Output: Both models delivered high-quality, accurate summaries that captured the key points of the document.

- The Cost Comparison:

- GPT-4o: Processing the input and generating the output cost approximately $0.14.

- DeepSeek V2: The same task cost approximately $0.04.

Verdict: Once again, DeepSeek V2 is the decisive winner on price. It delivered a comparable result for less than a third of the cost, making it an incredibly compelling option for any application that involves large-scale document processing or text summarization. This is a key differentiator in the broader AI landscape.

The Verdict

For developers and businesses where API cost is a critical factor, DeepSeek V2 presents a revolutionary value proposition. Its Mixture-of-Experts (MoE) architecture isn’t just a theoretical advantage; it translates into tangible, significant, and often dramatic cost savings on real-world, high-volume tasks without sacrificing performance.

Showdown 2: The Reasoning and Coding Gauntlet

We’ve established DeepSeek V2’s incredible cost-effectiveness, but that advantage is meaningless if it can’t match the performance of the industry leader. This showdown pushes aside the price tags to focus on pure analytical and logical power. Can a hyper-efficient sparse model truly compete with a top-tier dense model on the most difficult technical challenges?

The Logic Puzzle

We started with a classic, complex logic puzzle (a “Zebra Puzzle” variant) that requires tracking multiple variables and making several layers of deductions to find the single correct answer.

- The Prompt: A multi-constraint puzzle involving five people, their professions, their pets, and their hobbies.

- The Result: Both DeepSeek V2 and GPT-4o solved the puzzle flawlessly. They correctly identified all the correct pairings. The primary difference was in the presentation of their reasoning.

- DeepSeek V2 provided an extremely structured, step-by-step breakdown of its logical inference, much like a computer scientist proving a theorem.

- GPT-4o also showed its work but in a more conversational, human-like narrative.

Verdict: This is a tie in capability. Both models are at the absolute elite tier for pure logical deduction. Your preference would only depend on whether you prefer a formal proof or a conversational explanation.

The Advanced Math Test

Next, we tested their mathematical abilities with a challenging problem that required a combination of algebra and geometry.

- The Prompt: A multi-step word problem requiring the models to set up and solve a system of equations based on a geometric shape’s properties.

- The Result: Once again, both models performed perfectly. They not only arrived at the correct numerical answer but also detailed each step of their calculation, from defining the variables to solving the final equation.

Verdict: It’s another dead heat. Both DeepSeek V2 and GPT-4o have achieved a level of mathematical proficiency that allows them to reliably solve complex problems that would challenge many human experts. Their performance reflects the top-tier scores both achieve on industry benchmarks. They are both true titans of AI in this domain.

The Verdict

When it comes to the core intellectual tasks of complex reasoning and mathematics, DeepSeek V2 is not just a cheaper alternative; it is a true performance peer to GPT-4o. Our tests show that its Mixture-of-Experts (MoE) architecture does not compromise on the raw analytical and coding power that developers and researchers demand. For these critical tasks, DeepSeek V2 performs at an equivalent, elite level.

Showdown 3: Multimodality and Real-Time Performance

After seeing how these models perform on raw logic and cost-efficiency, our final showdown tests the seamless, interactive experience that defines a next-generation conversational AI. This is the home turf of GPT-4o’s omni-model architecture, where low latency and fluid multimodality are the keys to victory.

Comparing Vision Capabilities and Latency

We designed a test to measure not just visual understanding, but the speed and responsiveness of that understanding.

- The Prompt: We used a live video feed of a desk with several items and asked a series of rapid-fire questions: “Identify the objects on the desk. Now, what color is the notebook? What is to the left of the keyboard?”

- GPT-4o’s Response: GPT-4o’s performance was remarkable. It answered each question almost instantly, with a conversational flow that felt like talking to a person. The latency between question and answer was negligible, allowing for a natural, back-and-forth interaction. Its answers were accurate and immediate.

- DeepSeek V2’s Response: DeepSeek V2 also correctly identified the objects and answered the questions. However, there was a noticeable delay—a distinct pause—between each question and its response. While its vision capabilities are strong, the processing felt slower and less optimized for a real-time conversational pace.

Verdict: GPT-4o is the decisive winner. Its unified, omni-model design provides a vastly superior user experience for any task that requires low-latency, real-time visual analysis. This is a common battleground for modern models, with different architectures yielding different results, as seen in the ChatGPT vs Qwen 2.5 showdown.

The Verdict

For any application where seamless, real-time interaction with voice or vision is the primary requirement, GPT-4o holds an undeniable and significant advantage. While DeepSeek V2 possesses powerful multimodal capabilities, GPT-4o’s polished architecture is specifically engineered for the low-latency, fluid cross-modal reasoning that creates a truly human-like conversational experience.

The Final Verdict: Which AI Should You Choose?

After a series of rigorous showdowns, the verdict in the DeepSeek V2 vs GPT-4o debate is not about a single winner, but about a clear choice between two different value propositions. One model offers a seamless, all-in-one experience, while the other offers a revolutionary combination of power and cost-effectiveness. Your decision will depend entirely on what you value most.

Choose DeepSeek V2 if…

…your primary concern is top-tier performance in coding and reasoning at the lowest possible inference cost.

If you are a developer, a startup, or a business with high-volume API needs, DeepSeek V2 is a game-changer. Its victories in our Cost-per-Task challenge and its elite performance in the Reasoning Gauntlet prove that you don’t have to pay a premium for top-tier intelligence. It is the clear choice for backend tasks where raw power and budget efficiency are paramount, a factor that is reshaping many AI face-offs.

- Personas: Startups, developers on a budget, and high-volume API users.

Choose GPT-4o if…

…you need the most polished, seamless, and versatile real-time multimodal assistant.

If your primary need is a fluid, human-like AI partner for a wide range of tasks, GPT-4o remains the king. Its victory in our Multimodality showdown highlights its superior user experience. For front-facing applications, content creation, and tasks that require instant voice or vision analysis, its polished, low-latency performance is worth the premium.

- Personas: General consumers, content creators, and users who need integrated voice/vision assistance.

Frequently Asked Questions (FAQs)

How can DeepSeek V2 be so cheap if it has 236B parameters?

It uses a Mixture-of-Experts (MoE) sparse architecture. This means it only activates a small portion (21B parameters) of its total network for any given task, dramatically reducing the computational inference cost.

Is DeepSeek V2’s API as fast as GPT-4o’s?

For real-time, conversational tasks, GPT-4o’s unified omni-model design gives it a noticeable speed advantage. For many backend text generation and coding tasks, their speeds are highly competitive.

Is DeepSeek V2 fully open-source?

DeepSeek V2 is “openly available” for researchers and provides a highly affordable commercial API. Users should always check the latest license terms on the official DeepSeek website before using the model for commercial purposes.

Which model is better for creative writing?

While both are highly capable, GPT-4o generally has a slight edge in creative writing. Its tuning is more focused on producing natural, conversational, and creative prose, whereas DeepSeek V2’s strengths lie more in technical and logical text generation.

Conclusion: A New Era of Efficiency

The showdown between DeepSeek V2 vs GPT-4o marks a pivotal moment in the AI industry. It proves that raw power is no longer the only metric that matters. DeepSeek V2’s revolutionary architecture introduces a new paradigm of parameter efficiency, offering elite intelligence at a price point that democratizes access to powerful AI. While GPT-4o continues to offer the most polished and seamless user experience, DeepSeek V2 forces a crucial question: is that perfect polish worth the extra cost?

The choice highlights the great AI divide between different design philosophies. The best way to decide is to take a core task from your own workflow and calculate the cost. The answer will likely be surprisingly clear.

What matters more for your project: ultimate polish or ultimate efficiency? Share your thoughts in the comments below!